Sound From Diagrams and The Vivarium

The Diagram

For a long time now, I have had a habit, or method, of laying out my audio/installation/performance projects by drawing simple, yet detailed, diagrams of all the parts.

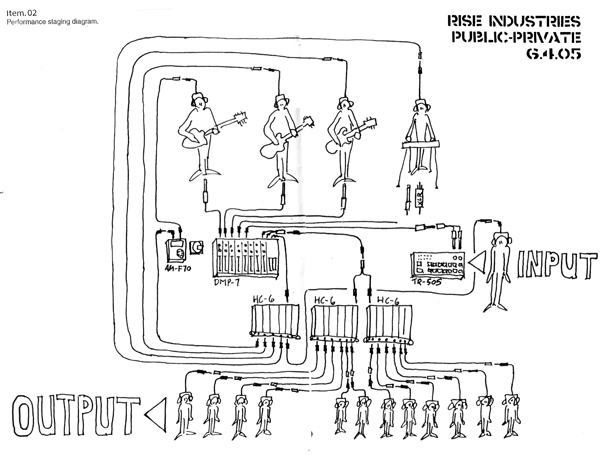

These diagrams would help me organize the signal flow, parts list, and layout for each project. I would actually use them to figure out how many cables to buy, with what connectors, and so on. Often I would start with a looser version that lays out the conceptual parts of the work and how they relate, and refine it a few times until I was ready to draw every little cord, element, and plug.

For the performances of Public/Private and Local Music I scanned and cleaned up my diagrams and included them in booklets I made for each show. Here is one from Public/Private:

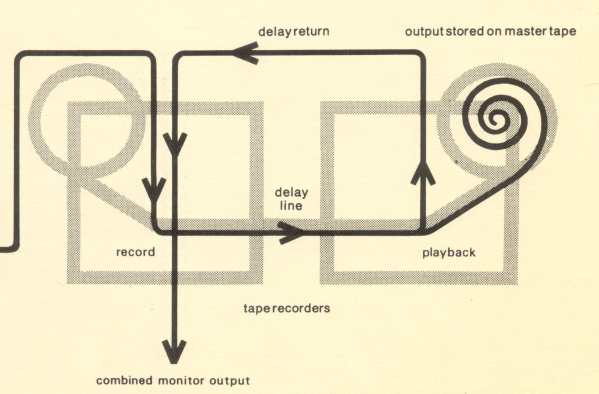

This little diagramming habit got me curious about other sound diagrams, and I dug up some interesting ones out there, including this gem from Brian Eno, showing how his analogue infinite tape loop system for Discreet Music worked.

There are also tons of people around the internet either posting their own sound rigs or diagramming bands' setups, so you can finally find out what hardware they use, in what configuration, to get that specific sound.

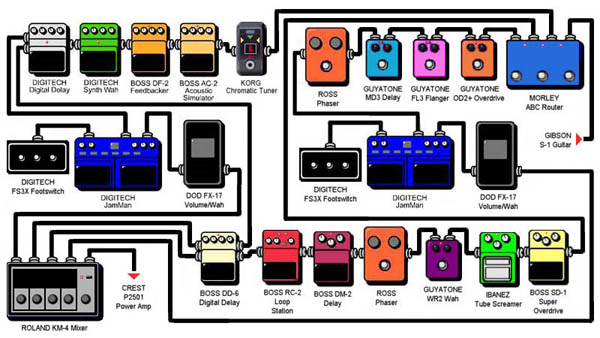

Here is a simple diagram of a guitar rig (found here):

Here is an example of a pedal-board layout, used by Ronnie Cramer (here) as a guide to build a flight-case-mounted effects board.

The diagram gets more representational here, and this is the prevalent style on the Guitar Geek website, a database of performers' guitar setups diagrammed in this fashion by fans. Check out Robert Fripp's setup here.

While these diagrams are interesting, they are merely graphic representations of how some musicians arrange and connect their tools. They borrow the logic of the circuit diagram, long used to draw out and conceptually test circuits before actually constructing them, but keep none of its symbols. It is in the symbols that the circuit diagram really becomes useful: the drawing represents the functions of physical objects so precisely that you can actually troubleshoot your circuit from it. I am interested in these properties of the diagram, and in the possibility of using the diagram to structure sound in a more direct way.

Schematic of the Voice of Saturn synthesizer (used in the video below)

Vivarium

Several months ago I got into a conversation with Juan Azulay of Matter Management about Moog synthesizer wiring. I think he had just posted looking for someone who knew how to wire a Moog (I do not), and I responded with some video of a (much simpler) kit synthesizer that I had built.

The legendary Moog

My synth and delay setup

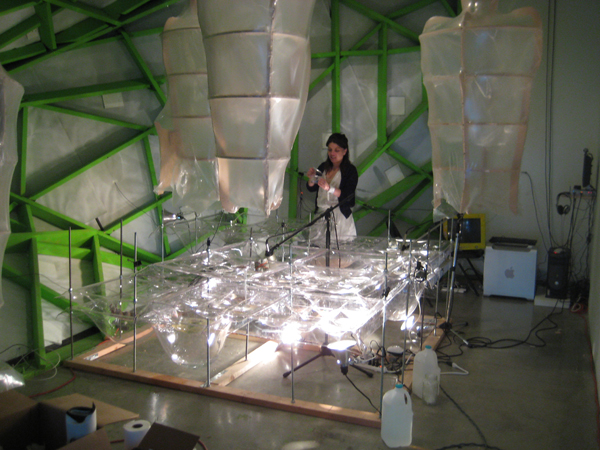

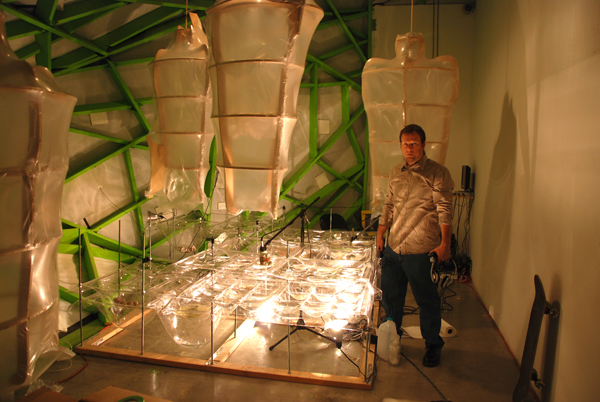

This short exchange led to my joining his Vivarium team as sound designer. For this project, I was vaguely tasked with creating a hybrid bio-electronic synthesizer that would take sensor input from a collection of living organisms and their support systems (light, heat, water), merge it with a set of software-based systems, and output sound that responded to changes in the living organisms, the support systems, and the software systems.

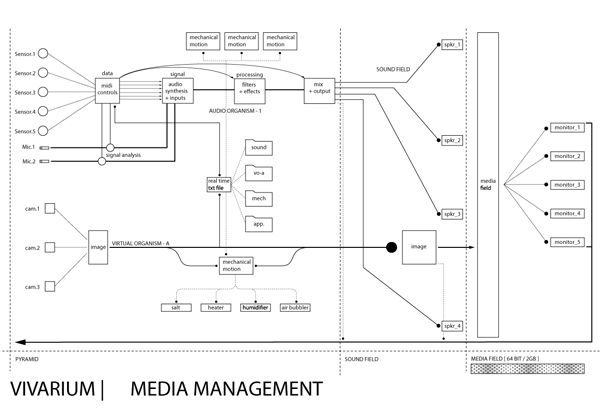

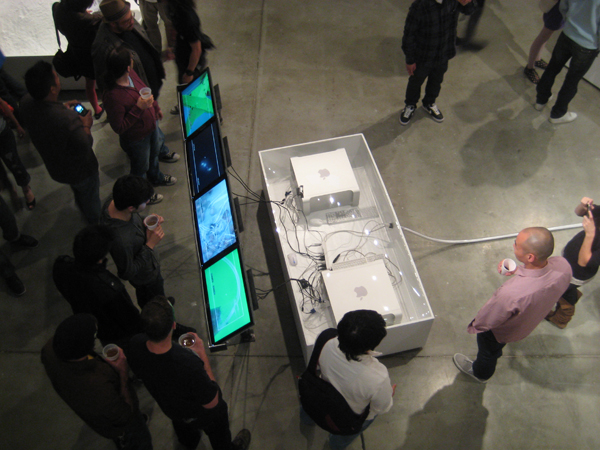

The sound system was to work in parallel with a video system of even greater complexity, created by a media team headed up by Doug Wiganowske. The video system takes input from cameras, feeds it to a series of virtual organisms (built as an evolving software construct by Nicholas Pisca), and merges all of that with video shot throughout the making of the Vivarium. The result is output to a set of monitors in the media field of the final installation.

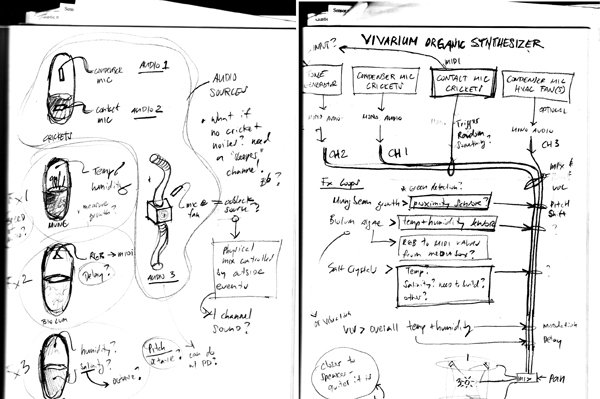

I began the sound design process by diagramming inputs, processes, and relationships that could be set up within this system, based on an assumed list of organisms and support systems. At the same time I began to search for sensors that could take the data we wanted, and translate it to MIDI so I could use it to work with the audio and data signals within the software. At this point I was worried I would have to build these sensors and processors by hand, and was looking to side-step that long process. I also started research into what software would be best for the set up.
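The post doesn't say how the sensor hardware encodes its readings, but the heart of "translate it to MIDI" is a range conversion: a raw reading has to be squeezed into MIDI's 7-bit 0-127 range and wrapped in a message the software can listen for. The sketch below assumes a 10-bit reading (0-1023) sent as a Control Change message; the function names and controller number 20 are hypothetical, not taken from the actual sensor kit.

```python
def scale_to_midi(raw: int, raw_min: int = 0, raw_max: int = 1023) -> int:
    """Linearly map a raw sensor reading onto MIDI's 7-bit range (0-127).

    Assumes a 10-bit converter by default (0-1023); readings outside the
    range are clamped rather than wrapped.
    """
    if raw_max <= raw_min:
        raise ValueError("raw_max must be greater than raw_min")
    clamped = min(max(raw, raw_min), raw_max)
    return round((clamped - raw_min) * 127 / (raw_max - raw_min))


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a 3-byte MIDI Control Change message: status 0xB0 | channel."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be 0-127")
    return bytes([0xB0 | channel, controller, value])


if __name__ == "__main__":
    reading = 512  # hypothetical reading from a light sensor
    # Controller 20 is an arbitrary, otherwise-undefined CC number.
    message = control_change(channel=0, controller=20, value=scale_to_midi(reading))
    print(message.hex())  # b01440
```

Clamping rather than raising on out-of-range readings is a deliberate choice here: a sensor that briefly spikes past its nominal range should pin the parameter at its limit, not stop the sound.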

The media and sound teams then collaboratively worked out a diagram of all the media for the installation, as a framework from which to develop our systems.

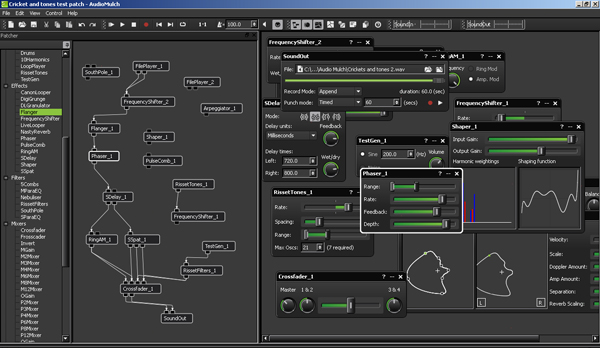

I found a couple of patch-based software packages that would be appropriate for the project, and began working with one of them, Audio Mulch, to develop test patches.

From the Audio Mulch website:

AudioMulch is an interactive musician’s environment for PC and Mac. It is used for live electronic music performance, composition and sound design.

AudioMulch allows you to make music by patching together a range of sound producing and processing modules.

I also found a source for the sensors I needed, and ordered a few so that I could test my input devices with my software patches. Here is the result of this first test (using some of my sounds and a short sample from the band Double):

(video was generated using Akira Rabelais‘ Argeïphontes Lyre software)

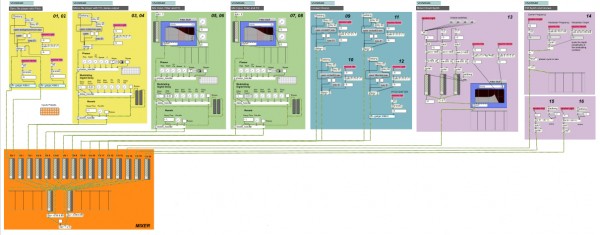

In Audio Mulch, patches are created by dragging objects onto a “patcher” area and connecting them with patch cords. The objects themselves are chosen from a list of audio handlers, generators, and processors. Once a patch has been assembled, in flow-chart fashion so that you can actually follow the path the signal takes through the patch cords, each element can be adjusted in the editor panel beside the patcher. The power of this program for me was that any of an object's parameters could be assigned to a MIDI input, enabling the sensors to control almost any element of the sound. The complexity and fidelity of the available objects were also quite impressive.
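To make the idea of a patch with MIDI-assignable parameters concrete, here is a toy model: modules hold named parameters, patch cords are source/destination pairs, and a MIDI map routes an incoming controller value onto one parameter's range. This is not Audio Mulch's internal design or file format; the class names, the "filter" module, and the 200-5000 Hz cutoff range are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """One object on the patcher: a name plus adjustable parameters."""
    name: str
    params: dict[str, float] = field(default_factory=dict)


@dataclass
class Patch:
    modules: dict[str, Module] = field(default_factory=dict)
    cords: list[tuple[str, str]] = field(default_factory=list)  # (source, destination)
    # MIDI controller number -> (module name, parameter name, low value, high value)
    midi_map: dict[int, tuple[str, str, float, float]] = field(default_factory=dict)

    def add(self, module: Module) -> None:
        self.modules[module.name] = module

    def connect(self, src: str, dst: str) -> None:
        self.cords.append((src, dst))

    def assign_cc(self, cc: int, module: str, param: str, low: float, high: float) -> None:
        self.midi_map[cc] = (module, param, low, high)

    def handle_cc(self, cc: int, value: int) -> None:
        """Scale an incoming 0-127 controller value onto the assigned parameter."""
        if cc not in self.midi_map:
            return  # unassigned controllers are ignored
        module, param, low, high = self.midi_map[cc]
        self.modules[module].params[param] = low + (high - low) * value / 127

    def signal_path(self, start: str) -> list[str]:
        """Trace the signal from `start` along the first outgoing cord at each step."""
        path = [start]
        while True:
            outgoing = [dst for src, dst in self.cords if src == path[-1]]
            if not outgoing or outgoing[0] in path:  # end of chain, or a feedback loop
                return path
            path.append(outgoing[0])


if __name__ == "__main__":
    patch = Patch()
    for name in ("player", "filter", "output"):
        patch.add(Module(name))
    patch.connect("player", "filter")
    patch.connect("filter", "output")
    patch.assign_cc(20, "filter", "cutoff_hz", 200.0, 5000.0)
    patch.handle_cc(20, 127)
    print(patch.signal_path("player"))     # ['player', 'filter', 'output']
    print(patch.modules["filter"].params)  # {'cutoff_hz': 5000.0}
```

Keeping the MIDI map separate from the modules mirrors the workflow described above: the signal path is built first, and sensor assignments are layered on afterwards without rewiring anything.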

Here, the diagram has become the instrument and sound generator itself, and as I constructed patch diagrams, I was building the software synthesizer that would generate sound from my array of sensors.

After I had worked within this system for many weeks, Juan and the team suggested I look into using MAX/MSP to build my patches. MAX/MSP is also a patch-based, flow-chart-like software tool, but in contrast to Audio Mulch’s small set of fixed audio objects, MAX/MSP is a visual programming environment of almost limitless application. From the MAX website:

An interactive graphical programming environment for music, audio, and media. Max is the graphical programming environment that provides user interface, timing, communications, and MIDI support. MSP adds on real-time audio synthesis and DSP (digital signal processing), and Jitter extends Max with video and matrix data processing.

While this would open up the sound system to many new possibilities, it would require that I learn a whole programming syntax, which is quite a bit more complex than just using new software with a simple user interface. I set about going through tutorials, taking apart demonstration patches, and building simple sound elements to test what I could and couldn’t learn to do within the time frame of the project.

A simple MAX patch synthesizer from the MAX tutorial pages.

Unlike with Audio Mulch, configuring the sensors to work with MAX/MSP was a challenge at first, since it required unraveling the syntax of the sensor manufacturer’s proprietary MAX objects. Once I had figured out all the tricks to get MAX and the sensors to talk, I made a simple light-driven MIDI piano patch. You can see in this short video how casting shadows on the sensor affects the simple MIDI piano sounds being generated randomly by the software.
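The post doesn't specify how the light reading shaped the random piano notes, so the sketch below picks one plausible mapping as an assumption: brighter light widens the random pitch range upward and raises the velocity, while a shadow keeps notes low and quiet. It emits raw MIDI Note On bytes (status 0x90 | channel) and uses a seeded random generator so runs are repeatable. The function names and note ranges are hypothetical.

```python
import random


def piano_note(light: int, rng: random.Random) -> tuple[int, int]:
    """Pick one random piano note from a 0-127 light reading.

    Hypothetical mapping: brighter light widens the pitch range upward and
    plays louder; a shadow (low reading) keeps notes low and soft.
    """
    light = min(max(light, 0), 127)
    low_note = 48                                  # C3 (with middle C = 60 as C4)
    high_note = low_note + 12 + light * 36 // 127  # C4 in shadow, up to C7 in full light
    note = rng.randint(low_note, high_note)        # inclusive on both ends
    velocity = max(1, light)                       # velocity 0 would act as note-off
    return note, velocity


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI Note On message: status 0x90 | channel."""
    return bytes([0x90 | channel, note, velocity])


if __name__ == "__main__":
    rng = random.Random(1)  # seeded so the sequence is repeatable
    for reading in (120, 120, 15, 15):  # bright, bright, shadow, shadow
        note, velocity = piano_note(reading, rng)
        print(reading, note_on(0, note, velocity).hex())
```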

With the sensors now talking to the software, I compiled an array of individual MAX patches, one for each type of sound or effect I wanted to include in the final sound system. Here I was somewhat limited by how new I was to MAX, and I will continue to refine and add to these patches throughout the run of the installation. Compared to my earlier Audio Mulch system, the complexity of the MAX system is more additive: it builds many simple elements into a large patch, rather than building up each element to create more complex sounds.

The modules in the above patch are color-coded by type, and each is separated into its own box for clarity. Below them, all of the individual audio channels are run into a mixer made up of individual faders and volume displays, then mixed down to the two speaker channels. In the final patch I added a filter at the end of each channel to guard against damaging low frequencies. I also had some help here from Michael Feldman in getting the patches to do what I wanted.
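The mixer stage described above can be sketched as follows: each channel passes through a high-pass filter to strip damaging low frequencies, is scaled by its fader, and is panned into the two speaker channels. The first-order RC-style filter, the 40 Hz cutoff, the equal-power pan law, and treating every channel as mono are all assumptions for illustration; the actual MAX patch may use different filter objects and settings.

```python
import math


def high_pass(samples: list[float], cutoff_hz: float, sample_rate: float = 44100.0) -> list[float]:
    """First-order (RC-style) high-pass filter: y[n] = a * (y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    a = rc / (rc + dt)
    out: list[float] = []
    prev_x = prev_y = 0.0
    for x in samples:
        y = a * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


def mix_to_stereo(channels, faders, pans, cutoff_hz=40.0, sample_rate=44100.0):
    """Filter, fade, and pan mono channels down to a (left, right) pair.

    channels: equal-length lists of mono samples
    faders:   linear gain per channel (1.0 = unity)
    pans:     0.0 = hard left, 0.5 = center, 1.0 = hard right
    """
    n = len(channels[0])
    left, right = [0.0] * n, [0.0] * n
    for samples, gain, pan in zip(channels, faders, pans):
        filtered = high_pass(samples, cutoff_hz, sample_rate)
        # Equal-power pan law keeps perceived loudness steady across the field.
        gain_l = gain * math.cos(pan * math.pi / 2)
        gain_r = gain * math.sin(pan * math.pi / 2)
        for i, s in enumerate(filtered):
            left[i] += gain_l * s
            right[i] += gain_r * s
    return left, right


if __name__ == "__main__":
    sr = 44100
    # One second of a 440 Hz tone riding on a DC offset; the filter removes the offset.
    tone = [0.5 + 0.5 * math.sin(2 * math.pi * 440 * i / sr) for i in range(sr)]
    left, right = mix_to_stereo([tone], faders=[0.8], pans=[0.5])
    print(len(left), len(right))
```

A one-pole filter only rolls off at 6 dB per octave, so a real protective filter for speakers would likely be steeper; the one-pole version is used here because it fits in a few lines.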

The patch at this point consisted of the following modules:

Stereo file player with sensor controlled pitch (on each stereo channel) and speed

Stereo file player with sensor controlled phasing and delay effects

Microphone input 1 with sensor controlled filter, phasing and delay effects

Microphone input 2 with sensor controlled filter, phasing and delay effects

A chorus of cricket sounds, each with sensor controlled speed (to replicate the actual crickets that will be in the Vivarium)

A minor chord synthesizer, with the root note created by sensor data, with sensor controlled octave switch and filters

A frequency modulation synthesizer driven by sensor data

And two simple tone generators driven by sensor data
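Two of these modules lend themselves to a short sketch. Below, one sensor value picks the root of a minor triad (root, minor third, perfect fifth) and a second sensor acts as the octave switch past a threshold; another sensor sets the modulation index of a simple two-operator FM voice, implemented, as is common in digital synths, by modulating the carrier's phase. The note range, threshold, index scaling, and function names are hypothetical choices, not the settings used in the Vivarium patch.

```python
import math

MINOR_TRIAD = (0, 3, 7)  # semitones above the root: root, minor third, perfect fifth


def minor_chord(root_sensor: int, octave_sensor: int, threshold: int = 64) -> list[int]:
    """Map a 0-127 sensor value to a minor triad rooted between C3 and B3.

    A second sensor acts as the octave switch: at or above `threshold`
    the whole chord jumps up twelve semitones.
    """
    root_sensor = min(max(root_sensor, 0), 127)
    root = 48 + root_sensor * 12 // 128  # 48 (C3) .. 59 (B3)
    if octave_sensor >= threshold:
        root += 12
    return [root + step for step in MINOR_TRIAD]


def midi_to_hz(note: int) -> float:
    """Equal-tempered pitch with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12)


def fm_index_from_sensor(sensor: int, max_index: float = 8.0) -> float:
    """Hypothetical scaling of a 0-127 sensor value to an FM modulation index."""
    return max_index * min(max(sensor, 0), 127) / 127


def fm_sample(t: float, carrier_hz: float, ratio: float, index: float) -> float:
    """Two-operator FM: a sine modulator at carrier_hz * ratio bends the carrier's phase."""
    modulator = math.sin(2.0 * math.pi * carrier_hz * ratio * t)
    return math.sin(2.0 * math.pi * carrier_hz * t + index * modulator)


if __name__ == "__main__":
    chord = minor_chord(root_sensor=90, octave_sensor=20)
    print(chord, [round(midi_to_hz(n), 1) for n in chord])
    index = fm_index_from_sensor(100)
    block = [fm_sample(i / 44100, 220.0, 2.0, index) for i in range(64)]
    print(f"FM index {index:.2f}, first samples:", [round(s, 3) for s in block[:4]])
```

Raising the modulation index adds sidebands and makes the tone brighter and harsher, which is why a single sensor driving the index can audibly change the character of the FM voice without changing its pitch.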

I ran a studio test, using the sensors I had available and the ambient conditions of my loft to control the patch. In the real installation, an array of eight sensors placed among the biology inside the Vivarium controls the patch.

Last week the final sensors arrived, I made the necessary tweaks to the patch, and spent several days installing the whole system while the Vivarium was being completed around me.

The Vivarium officially opened on March 26th with a small SCI-Arc reception, but over the next two weeks we will continue to refine the systems on site, getting everything optimized for a public reception and talk (between Matter Management’s Juan Azulay and SCI-Arc’s director Eric Owen Moss) on April 9th. During this time, I will also be working on getting the whole sound system to broadcast live over the web.

Matter Management’s Vivarium Installation is currently on display at SCI-Arc‘s Gallery.